43 label encoding vs one hot encoding

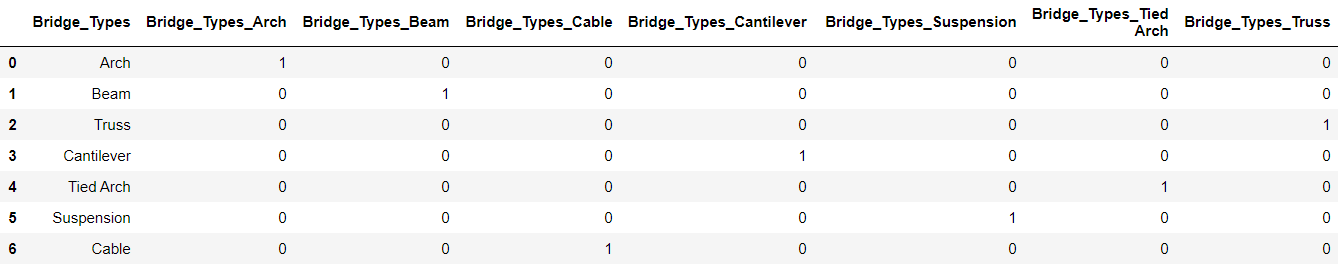

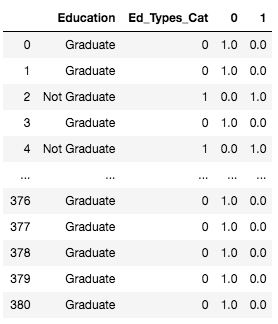

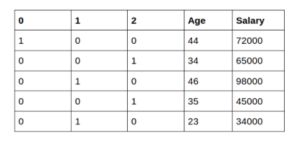

One hot encoding vs label encoding (Updated 2022) - Stephen Allwright The answer depends very much on your context. However, given that One Hot Encoding can be used with all machine learning models, whilst Label Encoding tends to work well only with tree-based models, I would always suggest starting with One Hot Encoding and looking at Label Encoding if you see a specific need.

Label Encoder vs. One Hot Encoder in Machine Learning (contactsunny.medium.com) Jul 29, 2018 · What one hot encoding does is take a column of categorical data that has been label encoded and split it into multiple columns. The numbers are replaced by 1s and 0s, depending on which column has which value. In our example, we get three new columns, one for each country: France, Germany, and Spain.
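The label-encode-then-split workflow described above can be sketched in plain Python. This is a from-scratch illustration, not scikit-learn's implementation; the country rows and the helper names `label_encode` and `one_hot_from_codes` are hypothetical.

```python
def label_encode(values):
    """Map each distinct category to an integer, using sorted category order."""
    classes = sorted(set(values))
    index = {c: i for i, c in enumerate(classes)}
    return classes, [index[v] for v in values]


def one_hot_from_codes(codes, n_classes):
    """Split integer codes into n_classes columns of 1s and 0s."""
    return [[1 if j == code else 0 for j in range(n_classes)] for code in codes]


countries = ["France", "Spain", "Germany", "France"]  # hypothetical rows
classes, codes = label_encode(countries)
rows = one_hot_from_codes(codes, len(classes))

print(classes)  # ['France', 'Germany', 'Spain']
print(codes)    # [0, 2, 1, 0]
print(rows)     # [[1, 0, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
```

Each output row contains exactly one 1, in the column of that row's country.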

PyTorch One Hot Encoding | How to Create PyTorch One Hot Encoding? - EDUCBA This guide introduces the basic idea of one-hot encoding in PyTorch, shows how one-hot encoded data is represented with an example, and explains how and when to use it.

Label encoding vs one hot encoding

Comparing Label Encoding And One-Hot Encoding With Python Implementation This gives us the accuracy score of the model using one-hot encoding. After applying the one-hot encoder, the Embarked classes are encoded as C = 1,0,0, Q = 0,1,0 and S = 0,0,1, while male and female in the Sex column are encoded as 0,1 and 1,0 respectively.

One-Hot Encoding - an overview | ScienceDirect Topics In one-hot encoding, a separate bit of state is used for each state. It is called one-hot because only one bit is “hot” or TRUE at any time. For example, a one-hot encoded FSM with three states would have state encodings of 001, 010, and 100. Each bit of state is stored in a flip-flop, so one-hot encoding requires more flip-flops than binary encoding.

ML | One Hot Encoding to treat Categorical data parameters 23.08.2022 · One approach to this problem is label encoding, where we assign a numerical value to each label, for example mapping Male and Female to 0 and 1. But this can add bias to our model, as it may start giving higher preference to Female because 1 > 0, although ideally both labels are equally important in the dataset. To deal with this issue we will use One …
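The Embarked and Sex encodings quoted from the Titanic comparison can be reproduced with a small from-scratch sketch, assuming categories are ordered alphabetically (to our understanding, this matches the default ordering of scikit-learn's `OneHotEncoder`). The sample rows are hypothetical.

```python
def one_hot(values):
    """Return (categories, rows); each row has a single 1 at its category's position."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


embarked_cats, embarked = one_hot(["S", "C", "Q", "S"])  # hypothetical sample
sex_cats, sex = one_hot(["male", "female"])

print(embarked_cats, embarked)
# ['C', 'Q', 'S'] [[0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(sex_cats, sex)
# ['female', 'male'] [[0, 1], [1, 0]]
```

With sorted categories, C maps to 1,0,0 and S to 0,0,1, and male comes out as 0,1 because "female" sorts first.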

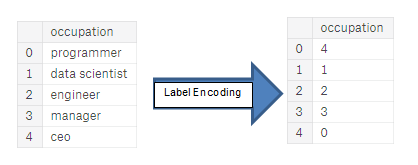

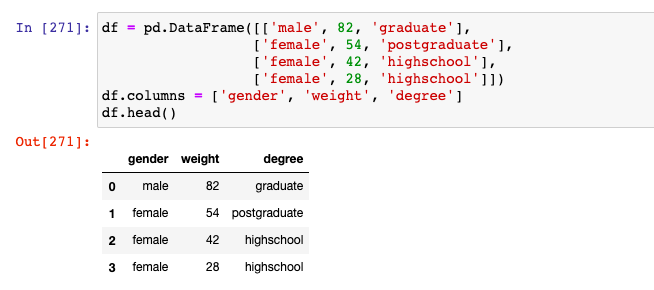

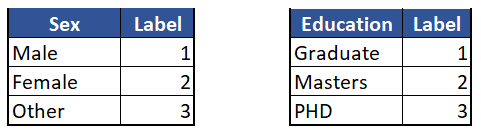

ML | One Hot Encoding to treat Categorical data parameters Aug 23, 2022 · One Hot Encoding using the Scikit-learn library: scikit-learn provides a one-hot encoder that converts numerical categorical variables into binary vectors. Before applying it, make sure the categorical values are label encoded, as one hot encoding takes only numerical ... (This requirement applies to scikit-learn versions before 0.20; since version 0.20, `OneHotEncoder` also accepts string categories directly.)

Feature Engineering: Label Encoding & One-Hot Encoding - Fizzy Categorical data often require a transformation before they can be included in a model, namely Label Encoding or One-Hot Encoding. Label Encoding: Label Encoding transforms text values into unique numeric representations. For example, the two categorical columns "gender" and "city" were converted to numeric values, a ...

ML | Label Encoding of datasets in Python - GeeksforGeeks 23.08.2022 · In machine learning, we usually deal with datasets that contain multiple labels in one or more columns. These labels can be in the form of words or numbers. To make the data understandable or human-readable, the training data is often labelled in words. Label Encoding refers to ...

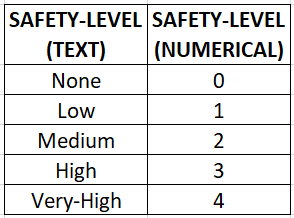

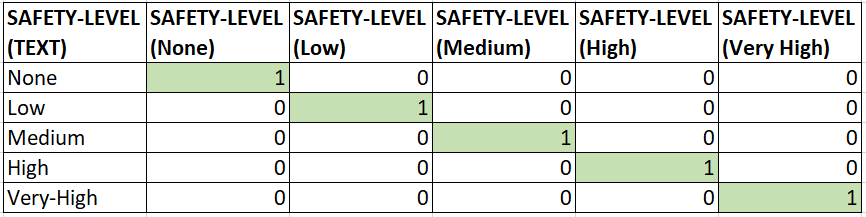

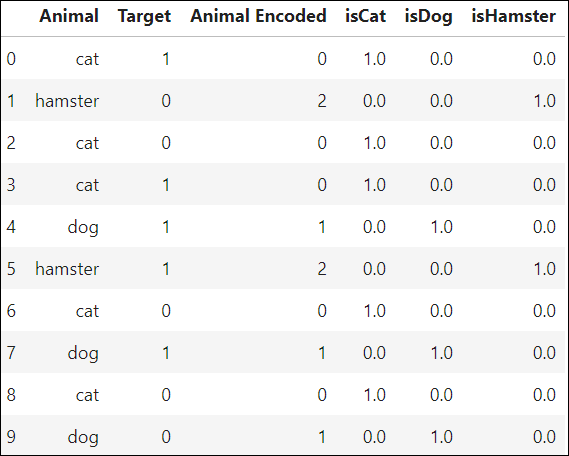

label encoding vs one hot encoding | Data Science and Machine Learning ... In label encoding, we convert categorical values into numeric values by assigning each category a number. Say our categories are "pink" and "white": in label encoding we replace pink with 1 and white with 0. This produces a single numerically encoded column, whereas one-hot encoding produces new columns.

Machine learning feature engineering: Label encoding Vs One-Hot ... In this tutorial, you will learn how to apply Label encoding & One-hot encoding using Scikit-learn and pandas. Encoding is a method to convert categorical va...

Label Encoding vs One Hot Encoding | by Hasan Ersan YAĞCI | Medium Label Encoding and One Hot Encoding. 1. Label Encoding: Label encoding is mostly suitable for ordinal data, because we give a number to each unique value in the data. If we use label encoding in...

Label Encoding vs. One Hot Encoding | Data Science and Machine Learning ... One-Hot Encoding: One-Hot Encoding transforms each categorical feature with n possible values into n binary features, with only one active. Most ML algorithms either learn a single weight for each feature or compute distances between samples. Algorithms like linear models (such as logistic regression) belong to the first category.
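The single-weight argument in the last snippet can be illustrated with a toy linear score. This is a from-scratch sketch: "blue" is a hypothetical third category added to the pink/white example, and both the label-encoding weight and the per-colour one-hot weights are arbitrary illustrative values, not learned ones.

```python
colors = ["white", "pink", "blue"]  # "blue" is a hypothetical third category
codes = {c: i for i, c in enumerate(colors)}  # white=0, pink=1, blue=2

# Label encoding: one column, so a linear model learns a single weight for it.
w = 0.5  # arbitrary illustrative weight
label_scores = {c: w * codes[c] for c in colors}

# One-hot encoding: one column per colour, so one weight per colour (arbitrary values).
one_hot_weights = {"white": 0.9, "pink": -0.3, "blue": 0.4}


def one_hot_score(color):
    """Dot product of the colour's one-hot vector with the per-colour weights."""
    vector = [1 if color == c else 0 for c in colors]
    return sum(one_hot_weights[c] * x for c, x in zip(colors, vector))


print(label_scores)  # {'white': 0.0, 'pink': 0.5, 'blue': 1.0}
print({c: one_hot_score(c) for c in colors})
```

Whatever value `w` takes, the label-encoded score for pink is exactly halfway between white and blue, so the model is forced to treat the codes as an ordered scale. With one weight per category, pink can score lowest (as it does here).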

What is One Hot Encoding? Why and When Do You Have to Use it? (hackernoon.com) Aug 02, 2017 · One hot encoding is a process by which categorical variables are converted into a form that can be provided to ML algorithms to do a better job in prediction. So, you're playing with ML models and you encounter this "One hot encoding" term all over the place.

Label Encoder vs. One Hot Encoder in Machine Learning 29.07.2018 · One Hot Encoder. As we already discussed, depending on the data we have, we might run into situations where, after label encoding, we confuse our model into thinking that a column has data with some kind of order or hierarchy, when it clearly doesn't ...

Encoding Categorical Variables: One-hot vs Dummy Encoding (towardsdatascience.com) Dec 16, 2021 · In one-hot encoding, we create a new set of dummy (binary) variables equal in number to the categories (k) in the variable. For example, if we have a categorical variable Color with three categories called "Red", "Green" and "Blue", we need three dummy variables to encode it using ...

Xgboost with Different Categorical Encoding Methods There are many different categorical feature transformation methods; in this post, four are listed: 1. Target encoding: each level of the categorical variable is represented by a summary statistic of the target for that level. 2. One-hot encoding: assign 1 to the specific category and 0 to the other categories, and transform ...
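The one-hot vs dummy distinction in the Color example comes down to k versus k - 1 columns. A minimal from-scratch sketch, assuming the first category ("Red") is chosen as the reference level; pandas offers a similar option via `get_dummies(..., drop_first=True)` and scikit-learn via `OneHotEncoder(drop="first")`.

```python
def one_hot(values, categories):
    """k columns: one binary column per category."""
    return [[1 if v == c else 0 for c in categories] for v in values]


def dummy_encode(values, categories):
    """k - 1 columns: the first category is the reference and is encoded as all zeros."""
    return [row[1:] for row in one_hot(values, categories)]


categories = ["Red", "Green", "Blue"]
values = ["Red", "Green", "Blue"]

print(one_hot(values, categories))       # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(dummy_encode(values, categories))  # [[0, 0], [1, 0], [0, 1]]
```

Dropping one column matters mainly for models with an intercept: the k one-hot columns always sum to 1, which makes them perfectly collinear with the intercept term.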

Difference between Label Encoding and One Hot Encoding - H2S Media Conclusion: Use Label Encoding when your data contains ordinal features, where it can give higher accuracy, and also when a categorical feature has a very large number of categories, because in such scenarios One Hot Encoding may perform poorly due to the high memory consumption of creating the dummy variables.

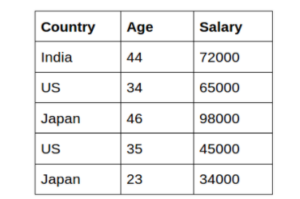

Choosing the right Encoding method-Label vs OneHot Encoder 08.11.2018 · What one hot encoding does is take a column of categorical data that has been label encoded and split it into multiple columns. The numbers are replaced by 1s and 0s, depending on which column has which value. In our example, we get four new columns, one for each country: Japan, U.S., India, and China. For rows which have the …

Categorical Data Encoding with Sklearn LabelEncoder and ... - MLK Label Encoding vs One Hot Encoding. Label encoding may look intuitive to us humans, but machine learning algorithms can misinterpret it by assuming the categories have an ordinal ranking. In the article's example, Apple has an encoding of 1 and Broccoli has an encoding of 3. This does not mean Broccoli is higher than Apple, but it can mislead the ML algorithm.
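For distance-based algorithms (such as k-nearest neighbours), the misleading ranking shows up as unequal distances. A from-scratch sketch, assuming Apple = 1 and Broccoli = 3 as in the snippet; "Banana" = 2 is a hypothetical middle item.

```python
import math

codes = {"Apple": 1, "Banana": 2, "Broccoli": 3}  # "Banana" is hypothetical
categories = list(codes)


def one_hot(item):
    """One-hot vector for an item, as floats."""
    return [1.0 if item == c else 0.0 for c in categories]


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Label-encoded distance: Broccoli looks twice as far from Apple as Banana does.
print(abs(codes["Apple"] - codes["Banana"]), abs(codes["Apple"] - codes["Broccoli"]))  # 1 2

# One-hot distance: every pair of distinct items is sqrt(2) apart.
print(euclidean(one_hot("Apple"), one_hot("Banana")))
print(euclidean(one_hot("Apple"), one_hot("Broccoli")))
```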

One Hot Encoding VS Label Encoding | by Prasant Kumar | Medium There, we use Label Encoders for encoding because they replace the categories with labels that can be compared with each other. Taking the example of a Satisfaction rating, replacing "extremely dislike" with 0,...

Choosing the right Encoding method-Label vs OneHot Encoder (towardsdatascience.com) Nov 08, 2018 · Let us understand how the Label and One Hot encoders work; then we will see how to use these encoders in Python and their impact on predictions. Label Encoder: Label Encoding in Python can be achieved using the Sklearn library. Sklearn provides a very efficient tool for encoding the levels of categorical features into numeric values.

Ordinal and One-Hot Encodings for Categorical Data Encoding Categorical Data There are three common approaches for converting ordinal and categorical variables to numerical values: ordinal encoding, one-hot encoding, and dummy variable encoding. Let's take a closer look at each in turn. Ordinal Encoding: In ordinal encoding, each unique category value is assigned an integer value.
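Which integer each category receives matters for ordinal data. A from-scratch sketch, assuming a hypothetical "size" feature: without an explicit order, sorted (alphabetical) order is used, which scrambles the real ranking. scikit-learn's `OrdinalEncoder` accepts an explicit order through its `categories` parameter; `ordinal_encode` here is a hypothetical helper, not that API.

```python
def ordinal_encode(values, order=None):
    """Map categories to integers; without an explicit order, fall back to sorted order."""
    order = order if order is not None else sorted(set(values))
    index = {c: i for i, c in enumerate(order)}
    return [index[v] for v in values]


sizes = ["small", "large", "medium"]  # hypothetical ordinal feature

print(ordinal_encode(sizes))                                # [2, 0, 1]
print(ordinal_encode(sizes, ["small", "medium", "large"]))  # [0, 2, 1]
```

Alphabetical order puts "large" first, so only the explicit order yields small < medium < large.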

One hot Encoding with multiple labels in Python? - ProjectPro 02.05.2022 · ... can compare numbers and will give different weight to different labels, and as a result it will be biased towards a label. So what we can do is make different columns according to the labels and assign bool values in them. …
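Making one column per label and filling in booleans can be sketched as below, assuming each row carries a set of labels (hypothetical genre data). Unlike plain one-hot encoding, a row may have more than one True. scikit-learn's `MultiLabelBinarizer` serves a similar purpose; `multi_label_binarize` is a hypothetical from-scratch helper.

```python
def multi_label_binarize(rows):
    """One column per label; a row gets True in every column whose label it carries."""
    labels = sorted({label for row in rows for label in row})
    matrix = [[label in row for label in labels] for row in rows]
    return labels, matrix


genres = [{"action", "comedy"}, {"drama"}, {"comedy", "drama"}]  # hypothetical data
labels, matrix = multi_label_binarize(genres)

print(labels)  # ['action', 'comedy', 'drama']
print(matrix)  # [[True, True, False], [False, False, True], [False, True, True]]
```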

Target Encoding Vs. One-hot Encoding with Simple Examples (medium.com, Analytics Vidhya) One-hot encoding is easier to understand conceptually. This type of encoding simply "produces one feature per category, each binary." For the article's example, that means creating a new feature for cat, dog,...
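Target encoding, which the Xgboost post describes as representing each level by a summary statistic of the target, can be sketched with the mean as that statistic. The animals and the binary "adopted" target are hypothetical, and `target_encode` is a hypothetical helper.

```python
from collections import defaultdict


def target_encode(categories, targets):
    """Replace each category with the mean target value observed for that category."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for c, y in zip(categories, targets):
        sums[c] += y
        counts[c] += 1
    means = {c: sums[c] / counts[c] for c in sums}
    return means, [means[c] for c in categories]


animals = ["cat", "dog", "cat", "dog", "cat"]  # hypothetical feature
adopted = [1, 0, 0, 0, 1]                      # hypothetical binary target
means, encoded = target_encode(animals, adopted)

print(means)  # {'cat': 0.6666666666666666, 'dog': 0.0}
print(encoded)
```

Unlike one-hot encoding, this keeps a single column however many categories there are. Because it uses the target, the means are usually computed on training data only (often per cross-validation fold) to avoid leaking the target into the features.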

What is One Hot Encoding? Why and When Do You Have to Use it? 02.08.2017 · One hot encoding is a process by which categorical variables are converted into a form that could be provided to ML algorithms to do a better job in prediction. So, you’re playing with ML models and you encounter this “One hot encoding” term all over the place. You see the sklearn documentation for one hot encoder and it says “ Encode categorical integer features …

Target Encoding Vs. One-hot Encoding with Simple Examples 16.01.2020 · One-hot encoding is easier to conceptually understand. This type of encoding simply “produces one feature per category, each binary.” Or for the example above, creating a new feature for cat ...

Encoding Categorical Variables: One-hot vs Dummy Encoding 16.12.2021 · In one-hot encoding, we create a new set of dummy (binary) variables that is equal to the number of categories (k) in the variable. For example, let’s say we have a categorical variable Color with three categories called “Red”, “Green” and “Blue”, we need to use three dummy variables to encode this variable using one-hot encoding. A dummy (binary) variable …

Label Encoding in Python - Javatpoint ... One-hot Encoding; Ordinal Encoding. However, this tutorial covers only Label Encoding. Understanding Label Encoding: In label encoding, we replace each categorical value with a numerical value ranging from zero to the total number of classes minus one. For instance, if the value of the categorical ...
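The 0 to (number of classes minus one) range can be seen in a minimal encoder. This class is hypothetical: it mirrors the method and attribute names of scikit-learn's `LabelEncoder` (`fit`, `transform`, `inverse_transform`, `classes_`) but is a from-scratch sketch, not that implementation. The city names are hypothetical.

```python
class SimpleLabelEncoder:
    """Hypothetical minimal label encoder: codes run from 0 to n_classes - 1."""

    def fit(self, values):
        self.classes_ = sorted(set(values))
        self._index = {c: i for i, c in enumerate(self.classes_)}
        return self

    def transform(self, values):
        return [self._index[v] for v in values]

    def inverse_transform(self, codes):
        return [self.classes_[i] for i in codes]


cities = ["Delhi", "Mumbai", "Delhi", "Chennai"]  # hypothetical data
enc = SimpleLabelEncoder().fit(cities)
codes = enc.transform(cities)

print(enc.classes_)                  # ['Chennai', 'Delhi', 'Mumbai']
print(codes)                         # [1, 2, 1, 0]
print(enc.inverse_transform(codes))  # ['Delhi', 'Mumbai', 'Delhi', 'Chennai']
```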

Label Encoding vs. One Hot Encoding: What's the Difference? One Hot Encoding: In most scenarios, one hot encoding is the preferred way to convert a categorical variable into a numeric variable, because label encoding makes it seem that there is a ranking between values. For example, label encoding a team variable assigns each team an integer, which implies an order among the teams that does not actually exist.

One-Hot Encoding - an overview | ScienceDirect Topics One important decision in state encoding is the choice between binary encoding and one-hot encoding. With binary encoding, as used in the traffic light controller example, each state is represented as a binary number. Because K distinct binary numbers can be represented with ⌈log₂ K⌉ bits, a system with K states needs only ⌈log₂ K⌉ bits of state.
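The flip-flop trade-off between the two state encodings can be computed directly. A small sketch with hypothetical helper names; `(k - 1).bit_length()` gives ⌈log₂ K⌉ exactly for K ≥ 1 without floating-point logarithms.

```python
def binary_state_bits(k):
    """Flip-flops needed to number k states in binary: ceil(log2 k), at least 1."""
    return max(1, (k - 1).bit_length())


def one_hot_state_bits(k):
    """One-hot encoding spends one flip-flop per state."""
    return k


def encodings(k):
    """Binary and one-hot state codes for k states, as bit strings."""
    width = binary_state_bits(k)
    binary = [format(i, f"0{width}b") for i in range(k)]
    one_hot = [format(1 << i, f"0{k}b") for i in range(k)]
    return binary, one_hot


print(encodings(3))  # (['00', '01', '10'], ['001', '010', '100'])
for k in (3, 8, 20):
    print(k, binary_state_bits(k), one_hot_state_bits(k))
```

For three states, the one-hot codes match the 001, 010, 100 example; at 20 states, binary needs 5 flip-flops while one-hot needs 20.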

Categorical Encoding | One Hot Encoding vs Label Encoding ... The number of categories is small, so one-hot encoding can be applied effectively. We apply Label Encoding when: the categorical feature is ordinal (like Jr. kg, Sr. kg, Primary school, High school), or the number of categories is quite large, since one-hot encoding can then lead to high memory consumption.
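The memory concern grows linearly with the number of categories when the encoded feature is stored densely. A back-of-the-envelope sketch with hypothetical helper names and a hypothetical dataset size; note that scikit-learn's `OneHotEncoder` returns a sparse matrix by default, which stores only the non-zero entries and softens this cost.

```python
def encoded_width(n_categories, method):
    """Number of columns one categorical feature turns into."""
    if method == "label":
        return 1
    if method == "one_hot":
        return n_categories
    raise ValueError(f"unknown method: {method}")


def dense_cells(n_rows, n_categories, method):
    """Stored values if the encoded feature is kept as a dense table."""
    return n_rows * encoded_width(n_categories, method)


n_rows = 100_000  # hypothetical dataset size
for n_categories in (4, 1_000):
    print(n_categories,
          dense_cells(n_rows, n_categories, "label"),
          dense_cells(n_rows, n_categories, "one_hot"))
# 4 100000 400000
# 1000 100000 100000000
```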
